Neural Relighting and Expression Transfer on Video Portraits

Youjia Wang* Taotao Zhou* Minzhang Li Teng Xu Lan Xu Jingyi Yu

ShanghaiTech University

Introduction

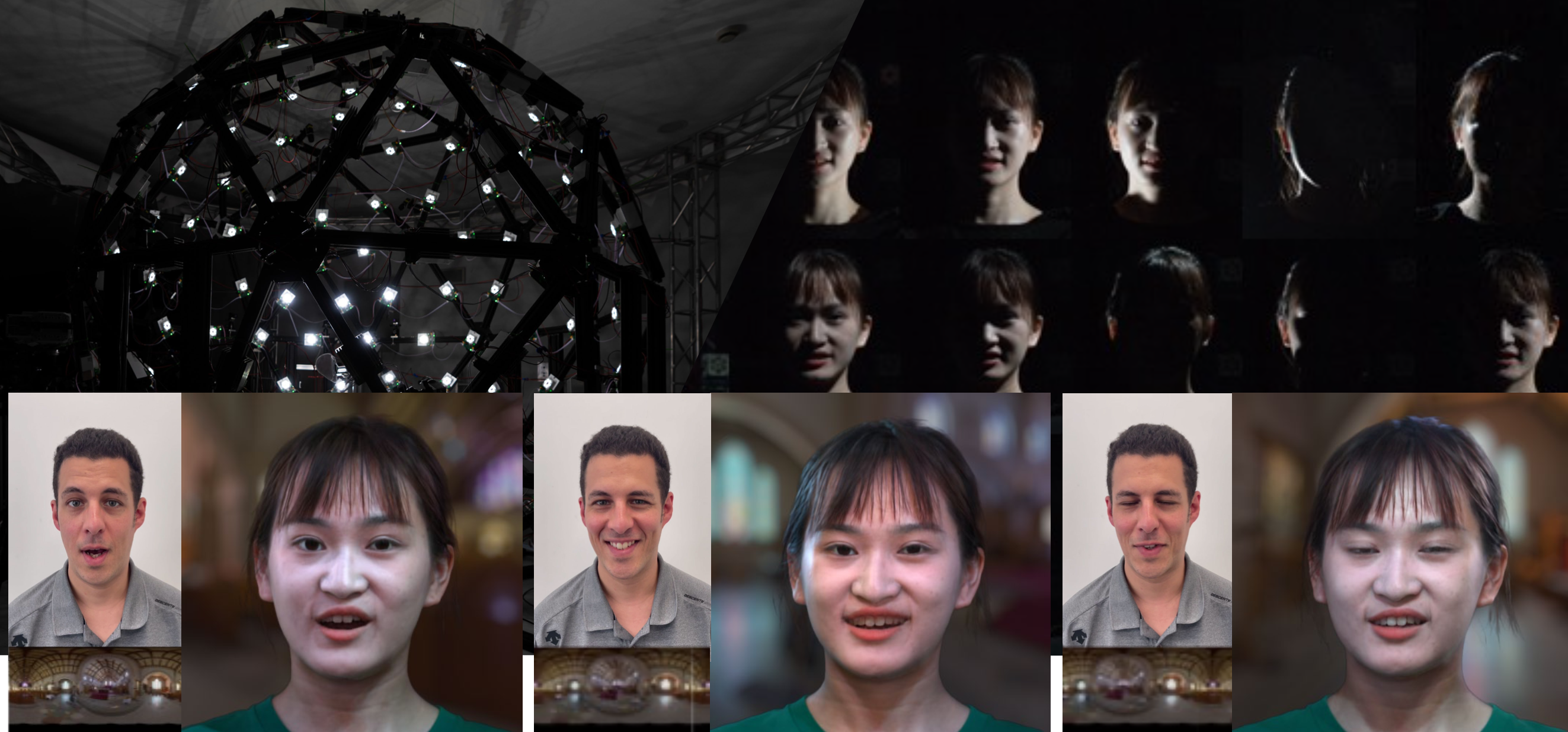

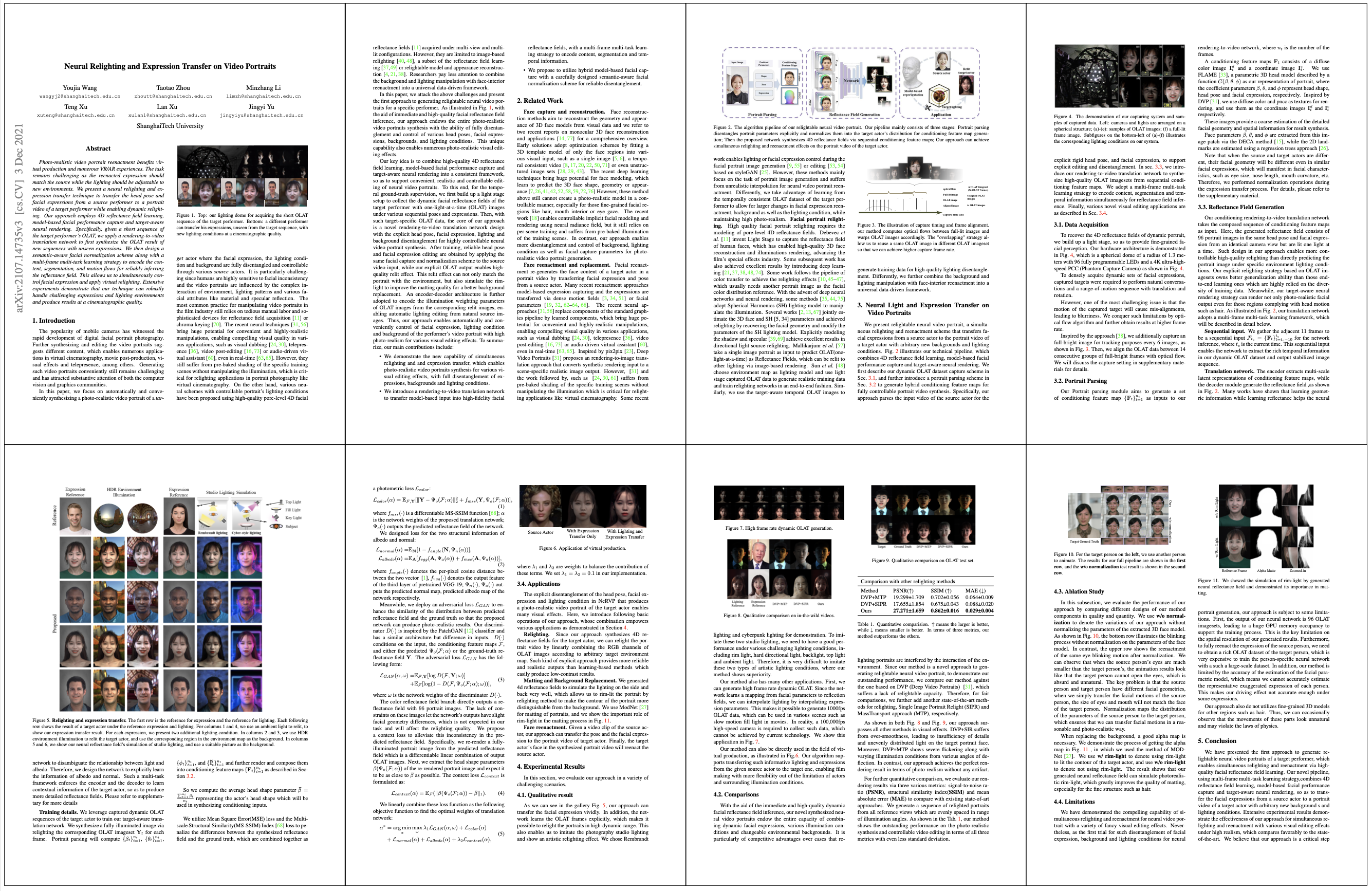

We present a relightable neural video portrait, a simultaneous relighting and reenactment scheme that transfers the facial expressions of a source actor to a portrait video of a target actor under arbitrary new backgrounds and lighting conditions. Our approach combines 4D reflectance field learning, model-based facial performance capture, and target-aware neural rendering. Specifically, we first adopt a rendering-to-video translation network to synthesize high-quality one-light-at-a-time (OLAT) image datasets from hybrid facial performance capture results. We then design a semantic-aware facial normalization scheme to enable reliable explicit control, as well as a multi-frame multi-task learning strategy that encodes content, segmentation, and temporal information simultaneously for high-quality reflectance field inference. After training, our approach enables photo-realistic and controllable video portrait editing on the target performer. Reliable editing of face pose and expression is obtained by applying the same hybrid facial capture and normalization scheme to the source video input, while our OLAT output enables high-quality relighting. Furthermore, by explicitly learning normal and albedo, our network expresses reflectance information more accurately. Because relighting and reenactment are achieved simultaneously, the approach improves realism in a variety of virtual production and video rewrite applications.
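The abstract states that the OLAT output enables relighting but does not spell out the computation. A minimal sketch of standard image-based relighting follows, assuming each OLAT image records the face lit by a single light and that a new environment is approximated by one RGB intensity per light. The function name `relight_from_olat`, the nested-list image layout, and the toy values are illustrative assumptions and are not taken from the paper; plain Python lists are used only to keep the sketch dependency-free.

```python
def relight_from_olat(olat_images, light_colors):
    """Relight a frame as a weighted sum of its OLAT images.

    olat_images:  list of N images, where image[y][x] is an (r, g, b) tuple
                  captured (or predicted) with only light i switched on.
    light_colors: list of N (r, g, b) intensities, one per OLAT light,
                  e.g. sampled from an environment map (assumed input).
    """
    if not olat_images:
        raise ValueError("need at least one OLAT image")
    if len(olat_images) != len(light_colors):
        raise ValueError("need exactly one light color per OLAT image")

    height = len(olat_images[0])
    width = len(olat_images[0][0])
    relit = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]

    # Light transport is linear, so contributions of individual lights add up.
    for image, color in zip(olat_images, light_colors):
        for y in range(height):
            for x in range(width):
                for c in range(3):
                    relit[y][x][c] += image[y][x][c] * color[c]
    return relit


# Toy example: two lights, a single-pixel image.
olat = [
    [[(1.0, 0.5, 0.0)]],  # response to light 0
    [[(0.0, 0.5, 1.0)]],  # response to light 1
]
environment = [(2.0, 2.0, 2.0), (1.0, 1.0, 1.0)]
result = relight_from_olat(olat, environment)
```

The sketch relies only on the linearity of light transport: any target illumination expressible as a combination of the OLAT lights can be rendered by summing the correspondingly scaled OLAT images.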

Video

Publication

Paper - ArXiv - pdf (abs) | supplementary (pdf)

If you find our work useful, please consider citing it:

Cite as: arXiv:2107.14735 [cs.CV]